In political debates, it’s common for participants to challenge one another to define what they mean by terms like “left” and “right.” That instinct is correct: a rational argument depends on shared definitions. If two people use the same word to mean different things, they are not really debating at all—they are talking past each other. When someone clarifies meanings, they are often accused of “playing semantics.” But semantics are essential to dialectical reasoning, whose goal is to produce light rather than heat, so that individuals can make rational decisions based on their interests and principles.

The problem in the case of left and right, however, is deeper than people usually realize. Left and right are not substantive political categories. They’re not metaphysical or ontological concepts. They have no inherent content—no fixed assumptions, axioms, or principles that define them. They are merely positional labels. By contrast, terms like liberal and conservative do have real substance. They refer to identifiable political philosophies with stable core commitments.

The origin of the left–right distinction makes this clear. During the French Revolution, liberals sat on the left side of the National Assembly, while conservatives (traditionalists, monarchists, and defenders of the ancien régime) sat on the right. From this accident of seating emerged a vocabulary that has been treated ever since as though it describes deep political realities. Apart from the philosophical systems attached to them in a given place or at a given moment in time, these positional labels have no transhistorical meaning.

At that moment in French history, liberalism was the revolutionary force. It opposed absolutism and hereditary authority; it championed constitutional government, freedom of conscience, individual rights, and legal (or formal) equality. Liberalism was labeled “left-wing” not because left had intrinsic meaning, but because liberalism was challenging the existing power structure and its proponents sat on the left. Once liberalism succeeded, however, it became the new hegemonic order. Capitalism was legalized and normalized, constitutional government replaced absolutism, and liberal principles became the foundation of the state. Liberalism moved from being the antithesis to becoming the thesis. Where people sat shifted; what they believed didn’t.

Liberalism is the thesis of the American Republic. The Declaration of Independence, the US Constitution, and the Bill of Rights are liberal documents. The fact that what we know as modern conservatism is, to a significant extent, substantively liberal in character doesn't make liberalism conservative. No misuse of terms changes reality. It simply means that a synthesis has emerged that allows liberals and conservatives to forge a political coalition capable of reclaiming the American system from progressives, who have, in effect, abandoned all liberal principles. Liberals and conservatives remain distinct and have very real disagreements over the role of religious faith in politics, but these disagreements do not prevent them from finding common cause against the existential threats to the American Republic.

Returning to the historical French situation, a new antithesis soon emerged: socialism. Socialists challenged liberal capitalism and liberal individualism in the name of social ownership, collective responsibility, and economic and social equality. Something similar occurred in the United States, where it was associated with the emergence of progressivism, the ideology of corporate statism. And, to be clear, that's what socialism was in France. The socialist vision of Frenchman Claude-Henri de Saint-Simon (whom many consider the real father of sociology, rather than his secretary Auguste Comte) was corporatist and technocratic, with elite experts and industrial leaders managing society rather than democratic worker control.

With the rise of corporatism, opposition to liberal capitalism was labeled “left-wing,” and liberalism, the dominant system, was increasingly described as “right-wing” because it sat conceptually to the right of socialism. This is why liberalism is identified as right-wing around the world. America is the odd case because progressives dressed themselves as liberals: Teddy Roosevelt branded corporate statism a “New Liberalism” (also a “New Nationalism”), by which he meant a break from classical laissez-faire liberalism toward a state that actively regulated corporations, promoted social welfare, and (ostensibly) protected workers. This is the scheme that his fifth cousin, Franklin Roosevelt, institutionalized in the New Deal, vastly expanding the reach of the state into the lives of citizens and negating the liberal principle of limited government. Progressives refer to themselves as liberals to this day. Many conservatives call them liberals, too. Both are wrong.

This history reveals the core problem. “Left” and “right” do not name coherent philosophies; they describe shifting power relationships within a given hegemonic order. Under the prevailing hegemony of progressivism, whatever ideology challenges that hegemony is labeled “right,” and whatever defends or advances it is labeled “left,” regardless of their substantive philosophical content. This produces a conceptual absurdity. Liberalism can be “left-wing” when it opposes monarchy, then “right-wing” when it opposes corporate statism, even though its principles remain unchanged. Protesters advocating a form of absolutism can then march with signs declaring “No Kings!” Likewise, what is called “socialism”—more accurately corporatism or social democracy—can be labeled “left-wing” while opposing liberalism, despite being philosophically hostile to liberal individualism, which was the philosophy of those who once sat on the left. The labels float free of substance and become markers of power position rather than ideology.

This framework also leaves no conceptual space for a counterrevolution, because the counterrevolution is defined merely in relation to the hegemonic position, imagined or real. Reflexively, the counterrevolution is portrayed as “far right.” This is not mere wordplay when political antagonisms are framed as principled sides. In yesterday’s essay, “Between Corporate Hegemony and Popular Sovereignty: Donald Trump and the Bulwark of Populism,” I explain that Trump and the populist-nationalist movement represent an insurgency against the prevailing hegemony of corporate statism. Yet the movements to return America and Europe to their liberal foundations are identified as “reactionary.” This framework allows corporate-state propagandists to portray populism and nationalism as authoritarian, even fascistic, simply because they challenge progressive or social democratic hegemony, not because of their intrinsic philosophical content. Progressivism, today in rebellion against the US Constitution, cannot be reactionary because it is “left-wing,” a label that renders even terroristic violence immune from the derogatory terms so easily smeared on liberals and conservatives.

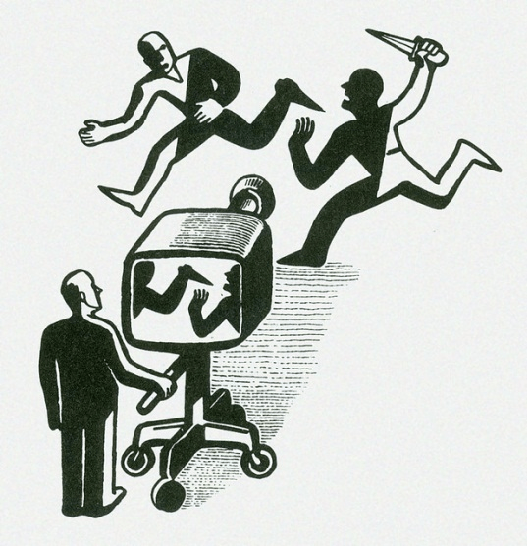

Conceptually, the left-right framework collapses under its own contradictions. In the practice of propaganda, however, the lack of intrinsic meaning only enhances the usefulness of these labels for those whose political function is to manipulate the public mind. Left and right are glittering generalities: a historical peculiarity repurposed as a weapon in partisan warfare. If left and right were real ontological categories, they would have stable definitions grounded in enduring principles. But they are not used that way. Instead, they are elastic labels that stretch to include mutually exclusive beliefs, deceiving the public when it is advantageous to hegemonic power while fracturing meaningful and effective coalitions built upon cogent syntheses of philosophical systems.

To be sure, there is peril in the liberal-conservative coalition. What we identify historically as right-wing is a constellation of beliefs—hierarchy, patriarchy, religious authority, and traditional social structures—that is, traditional conservatism. This was the worldview of those who sat on the right of the French National Assembly. Today, millions of Americans still identify with these ideas. Liberalism—commitment to free speech, constitutional limits, equality before the law, and individual rights—stands in contrast to traditional conservatism, even if it is now also defined as right-wing around the world. Obviously, these positions are antithetical when distilled into rigid substances; they cannot coherently belong to the same category.

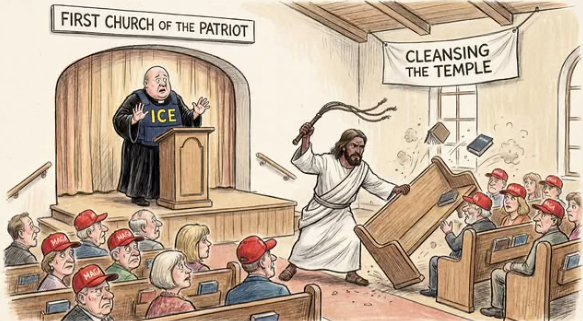

To the extent that those in the contemporary conservative movement regress to the belief-constellation of traditional conservatism, the liberal-conservative coalition fractures, a schism reinforced by the perception on that side that liberals are also progressives. This confusion speaks to the vital importance of reclaiming liberalism from the progressive distortion—to expose progressivism as the negation of liberalism, and reveal to the modern conservative that he is really a liberal, even if he believes in hierarchy and traditional values, which are also familiar to liberals. After all, both sides accept the reformulation of hierarchy as emergent inequality based on competition and meritocracy. Moreover, apart from a noisy Christian nationalist minority, the desire to see Christian ethics remain at the heart of the moral system of Western Civilization persists as a shared commitment. Indeed, in the face of critical race theory, queer praxis, and Islamization, liberals have become eager to join conservatives in reasserting America’s foundational ethics.

Clarifying these matters is not pedantic. The misuse of terms cannot be excused by the trivial observation that meanings change over time. Words either accurately and precisely refer to reality, or they become weapons of manipulation (as one sees in the repurposing of the word “gender” by those who mean to confuse the gender binary and the fact of its immutability). George Orwell warned the West at every turn about the consequences of repurposing language for political ends, in his novels Animal Farm and Nineteen Eighty-Four and in his seminal essay “Politics and the English Language.” Liberalism has a fixed meaning. It refers to a specific philosophical tradition centered on formal equality, freedom of conscience and speech, individual liberty, limited government, property rights, and the rule of law. Those principles do not change simply because political coalitions shift. Liberalism is something. Left and right, by contrast, are not.

I have described myself as a liberal my entire adult life, and I once thought of myself as being “on the left.” In retrospect, that was a mistake. I sometimes still make this mistake out of habit and, admittedly, out of concern that being labeled “right-wing” delegitimizes my arguments. Indeed, this is the function of positional terms and the reductive checklists based on them: to dismiss the arguments of one’s opponent out of hand. “That’s a right-wing argument” becomes the equivalent of plugging one’s ears and rehearsing progressive or socialist talking points. What I really mean when I describe myself as a “man of the left” is that I support liberal principles, not that I belong to a coherent “left-wing” ideology. Once progressives abandoned liberalism altogether, they left me standing to their right. “What happened to you?” they asked, as if something actually had. The real question is, what happened to them? Why did they abandon liberal principles? Why did they follow a term with no substantive meaning?

As I have endeavored to explain, liberalism has been redefined in American political discourse as progressivism. I reject that equation. And so should you, for the sake of objectivity. Liberalism and progressivism are not the same thing; they are, for the most part, opposites, or at least opposite enough to be incompatible. Progressivism embraces forms of administrative authority, collectivism, identity-based politics, and speech policing that liberalism explicitly rejects. When people like me (and there are many of us) say, “I used to be on the left, but the left has lost its mind,” what we mean is that progressivism has displaced liberalism on what was originally understood as the left, while still wearing the liberal label. I’m not abandoning liberalism. I’m refusing to follow words wherever they happen to wander. Indeed, I am more liberal now than I have ever been, and that is why I’ve changed my mind on some issues. To my progressive family and friends, that makes me a “right-winger.” It’s as if they never actually listened to me, but instead assumed tribal membership. In that respect, I understand their astonishment.

For these reasons, I no longer describe myself as left or right. This is not an attempt to obscure my politics by rejecting positional labels. Rather, it is a clearing out of the tangle of glittering generalities that obscure my moral and philosophical commitments. Those labels discourage reflective thinking by encouraging tribal habituation, collapsing distinctions between incompatible ideas, and making rational debate harder, not easier. They put rings in noses (sometimes literally) and lead the herds with invisible reins. Political philosophy should be discussed in terms of what people actually believe: conservatism, liberalism, progressivism, socialism, etc. These are meaningful categories. Left and right are not. I made the mistake in the past of identifying with a side and not a set of principles. Not totally (which is what allowed me to escape), but embarrassingly enough. I will do my best not to do that anymore. I am a liberal, not because I sit on one side of a shifting political spectrum, but because I affirm a tradition with a clear, stable, and defensible philosophical core.