In his Davos address yesterday, President Donald Trump offered a sharply critical assessment of Europe, arguing that the continent is “not heading in the right direction” and that parts of it have become “not recognizable” in recent decades. He attributed Europe’s economic and social difficulties to a mix of policy choices—especially expanding government spending, green-energy priorities, and what he called “unchecked mass migration”—and contrasted this with what he described as an American economic resurgence under his leadership.

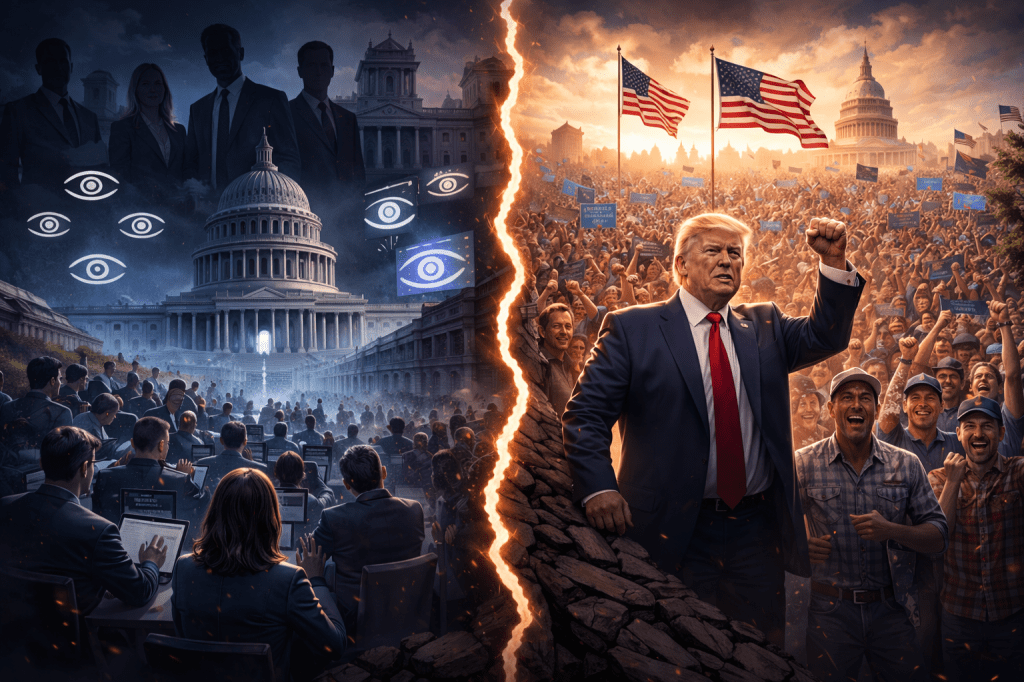

Trump framed large-scale immigration as economically and socially disruptive, contending that importing new populations had undermined growth, living standards, and social cohesion across the West. He insisted that tighter borders, cultural integrity, a move away from transnationalism, and a return to traditional economic policies were central to restoring prosperity, stability, and Western identity. This is what global elites did not want the working classes of the Western nations to hear. This is why those elites are in a panic: populist nationalism is on the rise, and the fascistic apparatus of the corporate state has failed to contain it.

Have you ever wondered why the machinery of the corporate state (the academy, the administrative bureaucracy, the culture industry, legacy media, the judiciary, and the donor networks of both major parties) reacted with such alarm when Donald Trump rose to power? Trump was a media darling before the dramatic moment in June 2015 when he descended the gilded escalator in Trump Tower to announce his first presidential campaign. For decades, the media had asked him when he would run for President. Now he was running, and it was the worst fate to befall the world since the appearance of Adolf Hitler. In fact, it was exactly that. A switch was flipped, and millions of people lost their minds.

These institutions mobilized to label Trump’s policies as authoritarian and fascist, even though previous presidents, such as Barack Obama, were able to expand executive power (or, more accurately, more fully exercise the inherent powers of the office), pursue foreign interventions, and carry out mass deportations of illegal aliens with little public outcry or moral condemnation. The difference lies not in the legality or scale of the actions themselves, but in their alignment with the corporate state. Trump’s populist agenda, backed by mass political support outside the elite consensus, threatens the carefully managed hegemony that sustains the corporate state, provoking a coordinated pushback from every institutional channel that protects it.

This essay, synthesizing analyses and arguments presented over several years on this platform, explores how the structure of the US federal government, while embedding deep corporate influence across culture, administration, and law, nonetheless preserves enough democratic mechanisms to allow such an outsider as Trump to govern—albeit precariously and under constant institutional resistance. Readers must understand that, while the Founders separated powers to establish a government resistant to fascist formation, the scheme requires a strong national leader and a movement of determined patriots who believe in the American system to fight the corruption of elite power that threatens that separation.

The contemporary United States is best understood not as a fully pluralistic democracy, but as a regime in which real governing power is exercised by what can be described as a corporate state. This corporate state is composed of large corporations and financial interests, the donor class embedded in both major political parties, legacy media institutions such as linear television and radio, the culture industry of film, music, and publishing, public education and the modern academy, the permanent administrative bureaucracy, and substantial portions of the judiciary.

These institutions need not conspire explicitly to act in concert; their unity emerges organically from shared career pathways, ideological assumptions, material incentives, and professional norms. Indeed, the situation is to a significant extent the result of a convergence of interests, as well as structural inertia. These streams form a coherent governing class whose interests and worldview dominate public life. But Leviathan is also the result of elite machinations—the transnational corporate agenda manifest in organizations like the World Economic Forum.

This arrangement is what we call corporatism, a defining structural feature of fascist systems, in which nominally private institutions are functionally integrated into state power. Political authority is exercised not primarily through elected representatives accountable to voters, but through a dense web of bureaucratic, cultural, and managerial forces and personalities that operate beyond direct democratic control.

While Europe is almost lost, America differs from the consolidating fascism of European history in a critical respect: it cannot yet permanently close itself off from popular participation. This is the genius of the Founders’ design of the American system. Constitutional requirements such as federalism, regular elections, and separation of powers compel the regime, when pressed by strong leaders and engaged patriots who love the Republic, to preserve democratic processes, even if those processes are constrained and steered by emergent structures antithetical to a democratic republic.

Before Trump, the system could maintain the appearance of democracy while limiting the scope of popular influence. After its marginalization in the wake of the Great Depression, the Republican Party, established in the previous century to rejuvenate the American system and liberate the South from its backwardness, came to play a central role in suppressing the popular will. For most of the postwar period, it functioned as an institutional intake valve for dissent, absorbing popular frustration with elite governance and redirecting it into safe, controllable channels. Bureaucratic resistance, donor influence, and party discipline ensured that this dissent did not translate into fundamental challenges to the corporate state. Republicans so inclined are often referred to as RINOs—Republicans in name only. When RINOs control the Republican Party, the party’s role is controlled opposition.

When the Democratic Party governs, however, the system approaches de facto one-party rule with gusto. During periods of Democratic control of the executive branch, the corporate state’s major elements—academia, administrative agencies, culture, media, and much of the judiciary—align almost entirely with the governing party. Appealing to the false doctrine of agency independence, bureaucratic agencies exercise maximal autonomy, insulated from electoral accountability, while judicial interpretation increasingly reflects the progressive consensus.

Beyond the forces of campaign finance and corporate lobbying, beyond the administrative state and regulatory capture, beyond the judiciocracy, ideology plays a major role in shaping the popular sphere. Opposition voices are not merely contested but delegitimized, framed as immoral, irrational, even dangerous. They are censored, deplatformed, marginalized—even targeted by the weapons of lawfare.

Under these conditions, nearly all substantive elements of fascism are present: the fusion of corporate and state power, ideological conformity enforced through cultural authority, governance by managerial elites, and the marginalization of opposition in place of popular sovereignty. What remains absent is permanence, because elections still exist. To be sure, the efficacy of elections in conveying the popular will can be weakened by ceding sovereignty to transregional and transnational institutions and relations, as we see in the case of the European Union and, to some extent, in the American case. The republican institutions of the United States remain robust in comparison. Yet our status as one of the few democratic societies in the world is in jeopardy.

This robustness explains how somebody like Donald Trump can ascend to the White House. Trump and the populist movement represent the antithesis of the corporate state and a genuine threat to it. This is not because Trump opposes capitalism as such (he is himself one of the more successful entrepreneurs in history), but because he rejects elite managerial control, globalized economic priorities that subordinate national interests, cultural authority monopolized by elite institutions, and the administrative state, which is bureaucratic governance detached from voters. Trump’s political power derives directly from mass democratic support rather than institutional endorsement, which makes him uniquely threatening to a system designed to manage and contain popular influence.

The aggressive reaction to Trump and populism follows logically from this threat. The culture industry and legacy media saturate the public sphere with negative framing and moral condemnation, shaping public perception and narrowing the bounds of acceptable discourse. The academy supplies intellectual justification for exclusionary practices and extraordinary measures. The administrative state delays, obstructs, or nullifies policy through procedural resistance, while the judiciary constrains executive authority through expansive and selective interpretations of law. This resistance does not require a centralized conspiracy; it is the predictable self-defense of an entrenched ruling order seeking to preserve, and reestablish, its hegemony. The desire for a New World Order is not whispered in corners. They tell us who they are and what they want.

Populism can govern at all only because democratic mechanisms have not been entirely dismantled. When an outsider like Trump captures the Republican Party, which is more open to mavericks than the Democratic Party (which isn’t really open to any, as we saw with the marginalization of Bernie Sanders and Robert Kennedy, Jr.), and is backed by a broad social movement (MAGA), the Common Man can override donor influence and compel even reluctant Republicans, including establishment figures, to align with the movement to remain electorally viable. However, such governance is fragile; it operates under constant constraint from administrative, cultural, and judicial power centers that remain outside popular control. Even members of Trump’s party want to move on from him. The RINOs are desperate to get back to the status quo. This is why they resist leading Congress to codify the America First agenda.

Every American election must be understood not merely as a contest between two parties, but as a struggle between corporate state hegemony and popular sovereignty. Trump and the patriots who stand behind him represent the movement of the Common Man. This is the leader the working class has been waiting for. They watched the First Family descend that golden escalator with entirely different eyes. Yet, while the people can assert their will electorally, that will is immediately checked, constrained, and filtered by non-electoral institutions, the institutions of elites who have a different plan for America—they mean to make America go away. Democracy exists, but only as a contested space rather than a governing principle.

Put simply, permanent fascism in America is prevented not by elite restraint or glittering generalities about democracy, but by the incomplete closure of the system, buttressed by constitutional structure, and enforced by mass participation. All of this is held together by what remains of republican virtue. As we celebrate our 250 years as an independent nation, patriotism is as much of an imperative today as it was in 1776. We cannot allow Democrats to retake power on November 3. Vote like Donald Trump is on the ballot. Even if your Representative or Senator is a RINO, punch his ticket. We are the bulwark against permanent fascism.