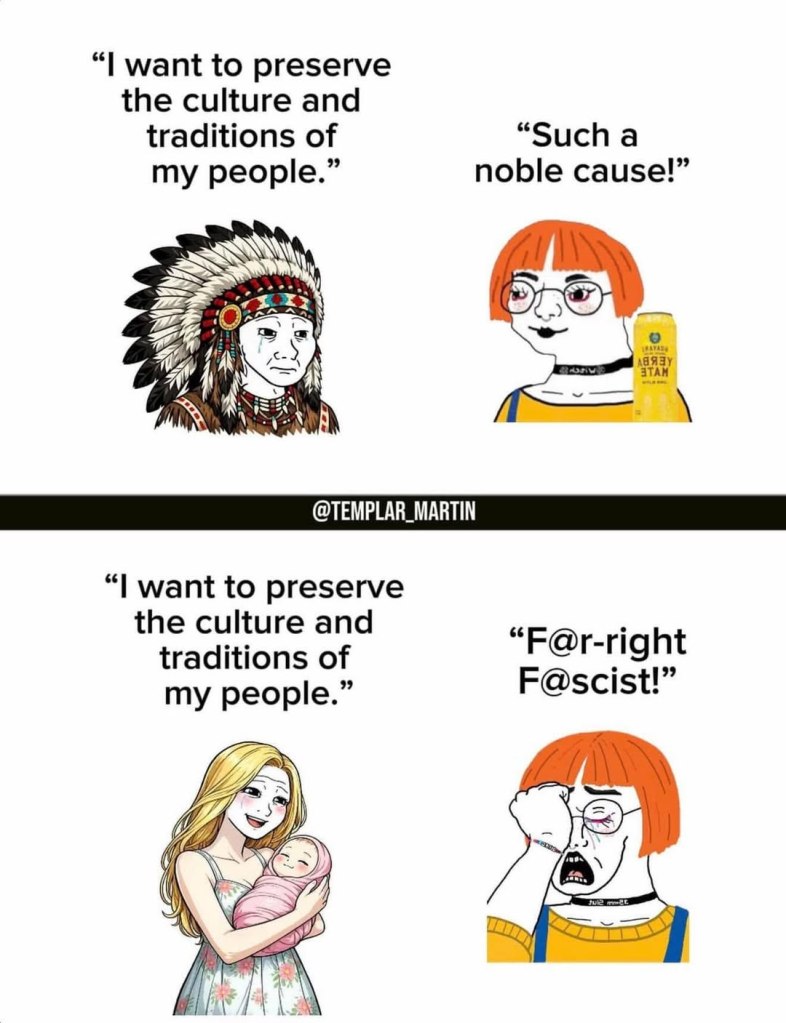

I came across a meme today that highlighted a striking double standard. On the left side of the top panel, there’s a man in a headdress essentially saying, “We just want to preserve our culture and traditions of my people.” On the right, a person with blue hair, piercings, and all the rest of it responds, “Oh, that’s so noble.” In the bottom panel, the left side shows a white mother and her child saying essentially what the man in the headdress said: “We just want to preserve our culture and way of life.” But on the right, the blue-haired person replies, “Far-right fascist!”

It’s a disturbing cartoon (one that has been pre-bunked by the claim that it originates with a racist content creator), but it speaks to something deep about what’s happening in society. Why can’t native white people make the same claim that American Indians can? How was it even possible for a few boats crossing the Atlantic to colonize and take over an entire continent? How is it that a word black people use all the time is forbidden for white people to use? Why is free speech constrained by a particular frame regulating racial matters?

As the Hodge Twins observe, minority groups in America—and immigrants—want power. Not all of them (maybe not most of them), but their leaders do, at least from what we see. They can’t use force against the majority, since they’re outnumbered. So, they use rhetoric to delegitimize the majority and advance their goal of undermining cultural and national integrity. Immigrant advocates can argue that assimilation is an attempt to destroy their culture, and they use that to remain in ethnic enclaves that provide a base for power centers that erode the majority culture and weaken it from within.

But whatever rhetorical power these groups have, it must come from outside the groups themselves. If whites were truly far-right fascists and colonizers who established white supremacy, why would they walk on eggshells, avoiding anything deemed offensive? Why would they allow resistance to assimilation? Why wouldn’t they force it?

White people and minorities who stand with them have been put in a bind: When they want to preserve their majority and culture, it’s portrayed as illegitimate, while minorities’ desire to preserve theirs is seen as legitimate—even though it’s an obvious contradiction. Whites would like for them to assimilate and become part of the mainstream, to become American. That’s a positive move. They’re not trying to erase people; they’re trying to welcome them into the country as countrymen. Their home cultures remain intact. If they want to live by those values, why come here? It’s the people who don’t want America to exist who resist becoming American. That makes it colonization.

But again, there must be some other power at play. One of the tricks the small number of men who crossed the Atlantic used so many centuries ago was to find members of the indigenous population willing to work with them, forming coalitions to expand their numbers. These are called colonial collaborators—enemies within who join with the invaders. We see this today with progressivism. Minority leaders will say America is a piece of shit. Since they’re smaller in number, it shouldn’t matter—except that many other Americans join them and agree: “You’re right, America is a piece of shit.” Tens of millions of Americans hate America. They are conditioned to hate America.

But that’s still not enough to explain it, because we must further ask: Why would Americans do this? Who (dis)organized them this way? It’s different from the colonization of the Americas, where there was no nation-state, just tribes at war with each other. It was easy to fracture them and ally with one to wipe out another. To be sure, the United States had its internal rivalries after its founding, the biggest being the Civil War. Then, during mass immigration in the late nineteenth and early twentieth centuries, groups came in and created ethnic enclaves, leading to gangs and other problems and, most importantly, to the disorganization of American culture, which progressives promoted with the idea of cultural pluralism, what today we know as multiculturalism. But the patriots stood up, cut off immigration, and built a unified nation, keeping whatever powers were above us from dividing us.

That lasted several decades, but then they opened the borders again, bringing in people from radically different cultures. They organized the colonial collaborators (the progressives who hated the nation anyway) and said, “Join with these minority groups.” I’m thinking about this today because I saw another meme: “You want lower rents? Deport 20 million illegals. You want cheaper healthcare? Deport 20 million illegals. You want cheaper auto insurance?” And so on. Every line makes perfect sense from basic political economy. Yet cities across the United States say, “You can’t come in and deport the illegals who are destroying our quality of life, fracturing our culture, diminishing the majority, and refusing to assimilate,” unlike the previous waves of immigrants brought in for the same ends. Cultural pluralism was rebranded as multiculturalism. Old wine in new bottles.

The key question is who. It’s not a conspiracy; this is the biggest project any group could pursue. You couldn’t hide something that big, and others know who’s behind it. You can clearly see the Democrats are behind it, but where did they get their power? When Bernie Sanders was asked about open borders in 2015, he said, “That’s a right-wing idea, a Koch brothers’ idea. That’s terrible for working people.” He was right. He doesn’t make that argument anymore because he’s become a colonial collaborator. Every man has his price, they say, and while I don’t think that’s always true, it was for Bernie.

But go back to what he said before he was corrupted: He told the truth about who’s doing this. Not just the Koch brothers, but big capitalist powers and big finance, of which the Kochs are a part. They say it openly sometimes, but other times code it in terms of compassion and empathy—emotional blackmail to shame kindhearted people (which is most of us) into compliance. A lot of people walk around thinking, “Oh my God, wouldn’t it be great if… but I don’t want to look like an asshole” or “We’re all human beings,” blah blah woof woof.

Once you realize it’s transnational corporations and big finance, ask yourself: Do I have interests in common with them? I’m just a working person trying to live in a safe neighborhood, maybe own a house, and save for retirement. I just want my kids to grow up happy, get married, and have children so I can have grandchildren. You know, all the good and normal things. And I want to worship God—or not—if I choose. But I know I don’t want that god. I don’t want people who hate dogs, blast calls to prayer throughout my city, or make women dress in garbage bags. But I’m such a piece of shit for not wanting that. I won’t be a good person unless I let these colonizers run over me.

Make no mistake: These are colonizers, sent by big economic powers. Those powers control the Democratic Party, which is trying to establish a one-party state to open the borders and flood the country. They’re doing it in Europe too. Why are so many people engaged in collective civilizational suicide?

Anyone with eyes wide open can see this. I’m watching Europe being Islamized and lost to multiculturalism. I look at the United States—we’re not as far down that road, but we’re headed there—and I think, how can we let this happen? Why would the people allow it? The fact is, tens of millions of Americans don’t want it, but arrayed against them are the university system, public education, the culture industry (all of Hollywood and the rest of the entertainment Goliath), the mass media, and the administrative state—operating by rules developed by a permanent political class that’s part of this project.

The patriots live mostly in rural communities and small towns; they don’t control the cities where the action is. They control most of the counties, but those lie outside the cities. They’re a big number and could rise, but if they do, they confirm what’s said about them in that meme: “You’re a fascist, far-right.” If people rise to save their nation, that is automatically taken as proof of the false claim that they’re what the destroyers say they are. Every hegemonic institution conveys this false reality, sucking tens of millions into an illusion that allows the country to be destroyed. This is why patriots need to stop worrying about being called racist, nativist, or fascist. By the progressives’ terms, the Founding Fathers were racist, nativist, and fascist, and indeed progressives say exactly that as part of their propaganda to destroy the nation: “This country rests on a white supremacist foundation. It’s racist.”

A black man who had learned the truth, left the Democratic Party, and became a Republican asked a protester, “Who was the first Republican president?” The protester didn’t know. The black man said, “Abraham Lincoln.” The protester looked confused. Abraham Lincoln was a Democrat, right? The Democrats fought against the Confederacy and freed the slaves. Republicans were the racists. That’s what he was thinking. You could see the confusion because many believe this inverted history. He even falsely believed that Lincoln owned slaves. The fact that Lincoln was a Republican and Republicans did all that gets memory-holed. As the conversation continued, the protester couldn’t figure it out; he had never tested his beliefs against external reality or objective history. What he believed was tribal, reinforced by a group with an inverted view of history and current events, making him resistant to truth. He moves through the world with his eyes wide shut.

This hegemonic project has gone on long enough to produce tens of millions of zombies who will destroy their own country, believing patriots are the fascists and that welcoming non-assimilating people makes them anti-racist. They will destroy the country, believing that they are the good guys. The very idea of nationalism—having an integral nation-state—sounds like fascism to them. Assimilation—integration, welcoming people to become part of our culture (“out of many, one”)—sounds racist: “You want to destroy their culture, erase them.” Well, they could stay where they came from. If they come here, they become part of our culture. Why come to America if you don’t want an America to come to?

Think about the reverse: Suppose white immigrants decided to go to some Sub-Saharan African country. First, black Africans might say, “We don’t want white people here,” and that is their prerogative; there is no right to migrate to another country. Progressives would cry, “Why are white people colonizing a black African country?” Not, “They’re seeking a better life; why are black Africans so mean? Bunch of racists.” No progressive would say that because it’s not part of the mind-space they’ve been indoctrinated into. Suppose black Africans said, “Okay, become part of our country: adopt our culture, learn our language, you’re welcome.” If whites said, “No, we want our own enclave, our own power centers, to change your laws,” the black Africans would say, “That’s not going to work.” Would whites call them racist? See how it doesn’t work outside the ideological frame? When you step outside the frame, it doesn’t make sense. You can see things clearly. This is why you test your ideas and beliefs against independent reality and objective historical narrative.

The double standard exposed in that “racist meme” isn’t accidental. The meme is mocking the engineered outcome of a long-term ideological and economic project designed to fracture national cohesion and replace sovereign peoples with atomized, controllable populations. What began as colonial tactics of divide-and-conquer has evolved into a modern form of colonization, where the tools are no longer muskets and alliances with warring tribes, but mass migration from incompatible cultures, relentless propaganda through education and media, and economic incentives from transnational capital that profits from cheap labor, suppressed wages, and eroded social solidarity.

The bind I described, where preserving one’s culture is branded “fascist” while minorities doing the same are celebrated, is a core mechanism of this project. It weaponizes guilt, shame, and the fear of social ostracism to paralyze resistance. Patriots are trapped: speak up, and you’re labeled the very thing the propaganda claims you are; stay silent, and the demographic and cultural transformation accelerates unchecked. Yet history shows that empires and nations fall not from external force alone, but when internal collaborators (whether indigenous elites in the past or today’s progressive class) lend legitimacy to the invaders’ cause.

We can know the drivers of the project because we can see them: big finance and corporations that benefit from open borders (as Bernie Sanders himself once admitted in 2015), coded now in the language of diversity, compassion, and human rights to emotionally blackmail the well-meaning. The Democratic Party apparatus, the administrative state, academia, Hollywood, and corporate media form the hegemonic machinery that inverts reality—turning assimilation into “erasure,” nationalism into “supremacy,” and self-defense into “hate.”

But here’s the turning point we must seize: this illusion is cracking. In the United States as of early 2026, the second Trump administration has already deported hundreds of thousands, prompted millions more to self-deport, and slashed unauthorized border arrivals to historic lows through aggressive enforcement and expanded detention. Resources are shifting toward American citizens—lower strain on housing, healthcare, and wages in affected areas—proving the political economy I highlighted in that second meme isn’t abstract theory; it’s delivering tangible relief. The cities and blue enclaves that once resisted are increasingly isolated as federal power reasserts itself.

Europe’s trajectory is grimmer: projections show Muslim populations continuing to rise sharply (e.g., toward twenty percent in Germany, higher percentages in Sweden by mid-century), with visible cultural shifts in major cities fueling debates over Islamization and multiculturalism’s failures. Yet even there, populist pushback grows, as ordinary people reject the narrative that preserving their heritage is illegitimate.

The zombies I describe—those indoctrinated into tribal inversions of history, blind to objective reality—will always exist in large numbers under sustained propaganda. But they don’t need to win. Patriots don’t need universal approval; they need resolve. Stop internalizing the slurs. The Founding Fathers built this nation without apology for their identity or purpose. Lincoln preserved the Union against division. If defending borders, enforcing laws, and expecting newcomers to adopt the American way makes one a “fascist” by progressive standards, then let them sling the mud—because the alternative is surrender.

Test everything against reality: step outside the frame, reverse the scenarios (whites demanding enclaves in Sub-Saharan Africa or elsewhere), and the hypocrisy collapses. The project relies on that frame staying intact. Break it by speaking plainly, organizing locally, supporting enforcement, and refusing shame. The country isn’t lost yet; it’s waking up. They’re trying to tell you that you’re dreaming, but you are awake now. The question isn’t whether the destroyers can be stopped; it’s whether enough patriots will act before the illusion becomes an irreversible demographic fact.

Preserve the nation not out of hate, but out of love for what it was built to be: a unified people (out of many, one) under laws, language, and values that made it exceptional. If we fail to defend that, there will be no America left for anyone to come to and assimilate into. The time for walking on eggshells is over. The time for clarity and courage is now.